Synchronization of Distributed Data Acquisition Systems Using CompactDAQ (Aerospace)

In the Test and Measurement industry we have a saying: “The Data is the Product.” At the end of a test campaign, the critical deliverable of the Data System is just that: The Data. Test Engineers are looking to test their models and refine their engineering through a feedback loop of data produced by your Data System. It’s not just “the data” though; it’s Data Integrity. High-quality data that the engineers can trust - this is how engineering progresses. If the system produces poor signal quality or misaligned signals, what good is that data?

As a Data Systems Engineer specializing in Aerospace applications, I've led the development of JKI's STAR-GOAT Data Acquisition and Control System. From Engine Test Stands to Wind Tunnels and <redacted>, our team has worked hard to ensure our data meets the demands of these mission-critical test facilities.

As these Facilities grow in complexity, one common design problem we face is dealing with Distributed Systems. Whether it’s a hundred meter long tunnel with sensors scattered end to end, or a high-voltage test facility with fiber optic isolation between measurement zones, or integrating with external systems (cameras, PLCs, 3rd-party hardware), the need for synchronized data across these distributed systems is undeniable. Today I want to share how we solved this using network time synchronization protocols to achieve sub-microsecond precision across distributed systems.

The Challenges of Distributed Synchronization

When you're working with a single CompactDAQ chassis or PXI system, NI has made synchronization increasingly straightforward over the years. The DAQmx driver handles most of the complexity under the hood, and NI provides solid examples for sharing a reference clock across the backplane and distributing a start trigger. If you understand the basics of how clocks and backplane routing work, single-chassis synchronization is a solved problem. However, the moment your system needs distributed capabilities, you're venturing beyond these built-in conveniences, and new challenges emerge.

The Traditional Approach Hits Physical Limits

For synchronized measurements across that 100-meter wind tunnel, the traditional approach would be running cables:

- Clock distribution cables to share your 10 MHz reference

- Trigger cables to ensure simultaneous start

- Analog cables for sensor harnessing throughout the facility

The reality is less forgiving:

Cable length limitations: Clock signals degrade significantly over long runs, introducing phase shifts that destroy timing precision.

Installation complexity: Routing shielded cables through active facilities is expensive, often physically impossible, and can create safety hazards.

Signal integrity: Long cable runs introduce noise, reflections, and timing skew that can actually be worse than having no synchronization at all.
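To put a rough number on the cable problem, the sketch below estimates the one-way propagation delay of a clock cable. The velocity factor of 0.66 is an assumed, typical value for solid-dielectric coax and is not taken from our facility; the calculation also ignores attenuation and dispersion, which make matters worse.

```python
# Rough propagation-delay estimate for a clock distribution cable.
SPEED_OF_LIGHT_M_PER_S = 299_792_458
VELOCITY_FACTOR = 0.66  # assumption: typical coax; check the cable datasheet


def cable_delay_ns(length_m: float, velocity_factor: float = VELOCITY_FACTOR) -> float:
    """Return the one-way propagation delay in nanoseconds."""
    return length_m / (SPEED_OF_LIGHT_M_PER_S * velocity_factor) * 1e9


def delay_in_clock_cycles(delay_ns: float, clock_hz: float = 10e6) -> float:
    """Express a delay as a number of periods of the reference clock."""
    return delay_ns * 1e-9 * clock_hz


if __name__ == "__main__":
    delay = cable_delay_ns(100.0)
    cycles = delay_in_clock_cycles(delay)
    print(f"100 m run: {delay:.0f} ns, about {cycles:.1f} cycles of a 10 MHz clock")
```

Under that assumption a 100-meter run delays the clock by roughly half a microsecond, which already consumes most of a sub-microsecond timing budget before any signal degradation is considered.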

The Network Alternative

The obvious alternative is leveraging existing network infrastructure - after all, everything is already connected via Ethernet. However, standard Ethernet introduces its own timing challenges: variable latency, jitter, and no guaranteed delivery times. Network packets can take anywhere from 1 ms to 100 ms to traverse the network depending on traffic conditions.

What We Need

For distributed measurement systems, we need a solution that combines the timing precision of shared hardware clocks with the flexibility of network-based communication. The synchronization must be precise (sub-microsecond accuracy), reliable, scalable, and compatible with existing network infrastructure.

This is exactly what modern network time synchronization protocols were designed to solve.

Network Time Synchronization Options: Picking the Right Tool

When you dive into network timing solutions, you'll encounter a soup of acronyms: PTP, TSN, IEEE 1588, IEEE 802.1AS, SNTP. Let's break down what actually matters for distributed measurement systems.

SNTP (Simple Network Time Protocol)

- What it is: Basic network time synchronization - essentially "what time is it?" over the network.

- Accuracy: 10-100 milliseconds

- Best for: Timestamping log files and general system housekeeping

IEEE 1588 / PTP (Precision Time Protocol)

- What it is: Intelligent time synchronization that compensates for network delays using timestamped message exchanges

- Accuracy: Sub-microsecond (with proper hardware support)

- Pros: High precision, widely supported

- Cons: Multiple "profiles" create compatibility chaos - automotive PTP differs from power grid PTP differs from telecom PTP

- Best for: Integrating with existing equipment that only supports specific PTP profiles
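The "timestamped message exchange" can be illustrated with the standard delay request-response arithmetic. This is a minimal sketch of the calculation only, not of any NI driver; the class and function names are made up for illustration, and the result is exact only if the network path is symmetric.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PtpExchange:
    """Four timestamps from one Sync / Delay_Req exchange, in nanoseconds."""
    t1: int  # master sends Sync (read on the master clock)
    t2: int  # slave receives Sync (read on the slave clock)
    t3: int  # slave sends Delay_Req (read on the slave clock)
    t4: int  # master receives Delay_Req (read on the master clock)


def mean_path_delay_ns(x: PtpExchange) -> float:
    """One-way network delay, assuming both directions take equally long."""
    return ((x.t2 - x.t1) + (x.t4 - x.t3)) / 2


def offset_from_master_ns(x: PtpExchange) -> float:
    """How far the slave clock is ahead of the master clock."""
    return ((x.t2 - x.t1) - (x.t4 - x.t3)) / 2


if __name__ == "__main__":
    # Slave clock runs 1500 ns ahead; the network adds 400 ns each way.
    exchange = PtpExchange(t1=1_000_000, t2=1_001_900, t3=1_010_000, t4=1_008_900)
    print(mean_path_delay_ns(exchange), offset_from_master_ns(exchange))
```

The slave subtracts the computed offset from its clock. Hardware timestamping matters because any software latency in capturing t1 through t4 feeds directly into the offset.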

IEEE 802.1AS

- What it is: A single, standardized PTP profile (also known as gPTP) defined by the IEEE 802.1 working group

- Accuracy: Sub-microsecond

- Pros: Eliminates profile compatibility issues, hardware optimized for this specific implementation

- Cons: Newer standard with limited legacy equipment support

- Best for: New NI-based systems and CompactDAQ distributed setups

TSN (Time Sensitive Networking)

- What it is: IEEE 802.1AS time synchronization plus deterministic networking features (guaranteed bandwidth, scheduled traffic)

- Accuracy: Sub-microsecond timing with deterministic data delivery

- Pros: Complete solution for control systems requiring both precise timing and guaranteed network performance

- Cons: Requires expensive TSN-capable switches, overkill for measurement-only applications

- Best for: Control systems requiring coordinated actions, not just synchronized measurements

Our Approach

For our implementation, we needed sub-microsecond precision across multiple CompactDAQ chassis without the expense of TSN-capable network infrastructure. This led us to IEEE 1588/PTP - a solution that could deliver the timing accuracy we needed while working with standard network equipment.

Implementation: Achieving Sub-Microsecond Synchronization with PTP

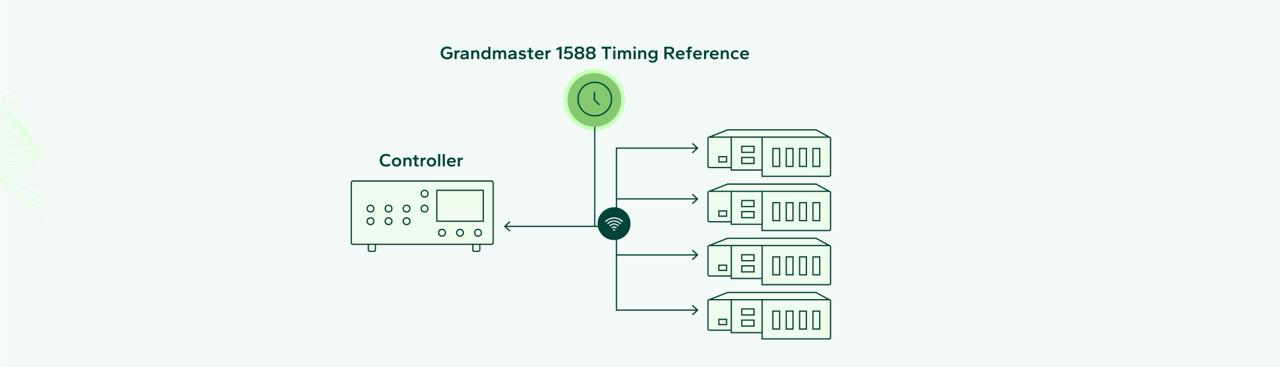

Our goal was straightforward: synchronized acquisition across a PXI system and distributed cDAQ chassis. The implementation broke down into three main steps: synchronize the PXI to IEEE 1588, synchronize the cDAQs to IEEE 1588, then coordinate everything together.

Hardware Configuration

PXI System:

- PXI-8881: Real-time Linux controller running our main application

- PXI-6683H: Timing synchronization board that generates a reference clock from the IEEE 1588 network signal

- PXI-6674T: OCXO synchronization module for routing the reference clock to the PXI backplane

Note: The 6674T is only needed if your PXI chassis lacks the timing and sync option. The PXI-1095 chassis can be ordered with an onboard OCXO, providing a clock reference input directly.

Distributed DAQ:

- 5x cDAQ-9185: Our distributed chassis with built-in TSN capabilities

Hardware Setup

Timing Reference: We used an IEEE 1588 Grandmaster as our authoritative time source. This can be a third-party device, or the 6683H can provide the Grandmaster signal if none exists on the network.

Clock Routing: Connect the CLK OUT from the 6683H to either the chassis CLK IN (if you have the timing and sync option) or the 6674T CLK IN.

Software Implementation

Step 1: Configure cDAQ for IEEE 1588. This one-time setup can be run independently of your main application. We used NI's provided sync example:

C:\Program Files\NI\LVAddons\nisync\1\examples\instr\niSync\Time-Based\Getting Started\Enable and Disable Time References.vi

Step 2: Configure PXI Timing Cards. Initialize IEEE 1588 sync on the PXI system. The 6683H will automatically detect an existing Grandmaster or become one if none exists on the network. At this point your 6683H will be producing a CLK OUT signal, disciplined to the 1588 signal, that is physically wired to your CLK IN reference (either on the 6674T or the back of the Timing & Sync Chassis). The next step is to tell your chassis to use this external clock as its reference.

Check out the NI article "Synchronizing FieldDAQ and TSN-Enabled Ethernet cDAQ Chassis to a PXI System" for an example of how to set this up in code.

Step 3: Synchronize DAQmx Tasks to the Reference Clock

- PXI: Once the PXI is receiving the IEEE 1588 reference, PXI_CLK100 and PXI_CLK10 become disciplined to this external reference. Configure your DAQmx tasks to use the PXI clock as their reference.

- cDAQ: No additional code needed! Once configured for IEEE 1588, all DAQmx task clocks are automatically synchronized.

The Start Trigger Challenge

Here's where things get interesting and where we found traditional NI sync techniques fall short. Despite having synchronized clocks, we discovered that tasks weren't starting at precisely the same time. Given that we were using IEEE 1588 PTP rather than one of the higher-tier protocols like TSN, this wasn't entirely surprising.

We adjusted our approach: instead of trying to achieve perfectly simultaneous starts, we focused on knowing exactly when each task started. With accurate t₀ timestamps and proper clock synchronization, we could achieve the same end result - perfectly correlated data.
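The idea of correlating by known start times can be sketched as follows. This is an illustrative outline rather than our production code: the function names are hypothetical, timestamps are assumed to be integer nanoseconds on a shared timescale, and both tasks are assumed to run at the same sample rate.

```python
NS_PER_S = 1_000_000_000


def sample_time_ns(t0_ns: int, sample_index: int, rate_hz: int) -> int:
    """Absolute time of a sample, from the task's hardware t0 and its index."""
    return t0_ns + (sample_index * NS_PER_S) // rate_hz


def start_skew_samples(t0_a_ns: int, t0_b_ns: int, rate_hz: int) -> float:
    """Sample periods by which task B started after task A (negative if earlier)."""
    return (t0_b_ns - t0_a_ns) * rate_hz / NS_PER_S


def index_at_time(t_ns: int, t0_ns: int, rate_hz: int) -> int:
    """Index of the sample in a task that lies closest to an absolute time."""
    return round((t_ns - t0_ns) * rate_hz / NS_PER_S)


if __name__ == "__main__":
    rate = 10_000                 # 10 kS/s, so one sample period is 100 us
    t0_pxi = 1_000_000_000        # hardware t0 of the PXI task
    t0_cdaq = 1_000_250_000       # cDAQ task started 250 us later
    print(start_skew_samples(t0_pxi, t0_cdaq, rate))    # 2.5 sample periods
    print(index_at_time(1_000_460_000, t0_cdaq, rate))  # nearest cDAQ sample
```

Because each sample's absolute time follows from t0 and its index, two streams that started at different instants can still be placed on one time axis and resampled or trimmed to their common window.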

PXI Timestamp Strategy: We used the NI Sync API "Enable Time Stamp Trigger" to monitor the actual start trigger signal. Here's the process:

- Route your master task's start trigger to a spare line

- Use the timestamp trigger to monitor this line

- After the task starts, use "Read Trigger Timestamp" to get the exact hardware timestamp

This hardware timestamp proved significantly more accurate than the software timestamp from DAQmx Read Waveform functions.

cDAQ Timestamp Strategy: TSN-capable cDAQs excel at time tracking. Our approach:

- Read the current time

- Add a small buffer period

- Command the task to start at a specific timestamp

Notably, we found that traditional DAQmx trigger timing methods were unreliable for this precision level.

Key Takeaways

- Use hardware timestamps: NI Sync Trigger Timestamp provides hardware-level accuracy for PXI tasks

- Leverage cDAQ time awareness: Use FirstSampleClk for the “When” and I/O Device Time for the “Timescale” for cDAQ timing

- Don't trust software timestamps: DAQmx Waveform t₀ values aren't sufficient for distributed system synchronization

- Adjust expectations: Perfect simultaneous starts aren't necessary - accurate start time knowledge achieves the same result

With these techniques, we consistently achieved sub-microsecond synchronization across our distributed measurement system.

Validation & Challenges: Proving It Works (And What Almost Broke It)

Achieving sub-microsecond synchronization is one thing - proving it works in real-world conditions is another. Here's how we validated our implementation and the gotchas that nearly derailed the project.

Validation Method: The Simultaneous Signal Test

To prove our synchronization worked, we fed identical test signals to inputs on different chassis and compared timestamps of detected events in post-processing. We generated a square wave, split it to both PXI and remote cDAQ systems, then used edge detection to identify when rising edges occurred on each system.

Results: We consistently achieved synchronization within 500-800 nanoseconds across chassis separated by hundreds of meters of network infrastructure.
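The post-processing step can be sketched roughly as follows. This is a simplified illustration, not our analysis code: the function names are hypothetical, timestamps are assumed to be integer nanoseconds, and edges are matched simply in order of occurrence.

```python
from typing import List, Sequence


def rising_edge_times_ns(samples: Sequence[float], t0_ns: int, dt_ns: float,
                         threshold: float) -> List[int]:
    """Times where the signal crosses threshold going up, linearly interpolated."""
    edges = []
    for i in range(1, len(samples)):
        lo, hi = samples[i - 1], samples[i]
        if lo < threshold <= hi:
            frac = (threshold - lo) / (hi - lo)  # position between the two samples
            edges.append(t0_ns + round((i - 1 + frac) * dt_ns))
    return edges


def edge_skews_ns(edges_a: Sequence[int], edges_b: Sequence[int]) -> List[int]:
    """Time difference (b - a) for edges paired in order of occurrence."""
    return [b - a for a, b in zip(edges_a, edges_b)]


if __name__ == "__main__":
    square = [0, 0, 5, 5, 0, 0, 5, 5]
    pxi = rising_edge_times_ns(square, t0_ns=0, dt_ns=1000, threshold=2.5)
    cdaq = rising_edge_times_ns(square, t0_ns=300, dt_ns=1000, threshold=2.5)
    print(edge_skews_ns(pxi, cdaq))
```

With an ideal square wave an edge is resolved only to within one sample period; the interpolation helps when the edge has a finite rise time that spans several samples, so the test signal and sample rate need to be chosen with the target resolution in mind.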

Key Challenges and Solutions

Network Sync Monitoring: We implemented continuous monitoring of IEEE 1588 sync status using NI-DAQmx properties. The system alerted the user when sync was lost and again when it was reestablished. This proved essential during long-duration tests where brief network interruptions could otherwise go unnoticed.
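The alerting logic amounts to reporting state changes rather than every poll. Here is a minimal sketch under stated assumptions: `read_locked` is a hypothetical callable standing in for whatever driver query reports sync status, and the class name and event strings are illustrative.

```python
from typing import Callable, List, Optional, Tuple


class SyncMonitor:
    """Record transitions of a polled sync-lock flag."""

    def __init__(self, read_locked: Callable[[], bool], clock: Callable[[], float]):
        self._read_locked = read_locked  # hypothetical wrapper around the driver query
        self._clock = clock              # returns the time to stamp each event with
        self._last: Optional[bool] = None
        self.events: List[Tuple[float, str]] = []

    def poll(self) -> None:
        """Call periodically; appends an event only when the state changes."""
        locked = self._read_locked()
        if locked != self._last:
            label = "sync established" if locked else "sync lost"
            self.events.append((self._clock(), label))
            self._last = locked
```

Keeping the loss and recovery times in the event list also makes it possible to flag the affected span of data afterwards, instead of discarding a whole overnight run.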

The UTC Offset Trap: Some network devices had a 37-second UTC offset (the same size as the current difference between TAI and UTC) that wasn't automatically compensated by NI Sync timestamp functions. Our timestamps were systematically offset between devices despite perfect relative timing. We implemented a UTC offset compensation to normalize all timestamps to a common reference.
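A compensation of this kind can be sketched as below. The 37-second figure equals the TAI minus UTC difference in effect since 2017, which suggests (as an assumption here) that the offset devices were reporting time on the TAI-based PTP timescale. The function names and the `source_is_tai` flag are hypothetical.

```python
NS_PER_S = 1_000_000_000
TAI_MINUS_UTC_S = 37  # assumption: current value; it changes if a leap second is added


def tai_ns_to_utc_ns(tai_ns: int, tai_minus_utc_s: int = TAI_MINUS_UTC_S) -> int:
    """Shift a TAI-based timestamp onto the UTC timescale."""
    return tai_ns - tai_minus_utc_s * NS_PER_S


def normalize_ns(timestamp_ns: int, source_is_tai: bool) -> int:
    """Bring timestamps from mixed sources onto one common (UTC) reference."""
    return tai_ns_to_utc_ns(timestamp_ns) if source_is_tai else timestamp_ns


if __name__ == "__main__":
    from_cdaq = 1_700_000_037_000_000_000  # device reporting TAI
    from_pxi = 1_700_000_000_000_000_000   # device reporting UTC
    print(normalize_ns(from_cdaq, True) == normalize_ns(from_pxi, False))
```

Hard-coding the offset is the simplest option but goes stale if a leap second is introduced; PTP grandmasters advertise the value in the currentUtcOffset field, which is a more robust source where it can be read.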

The Double Precision Time Bomb: Our time tracking slowly drifted during extended testing due to floating-point precision limitations. We were tracking elapsed time by repeatedly adding Δt (t = t₀ + Δt + Δt + Δt...), and because every addition rounds its result to the nearest double, the rounding errors accumulated into timing skew over thousands of samples. The fix: switch to multiplication (t = t₀ + Δt × sample_number), which rounds once per timestamp, so the error no longer grows with the sample count.
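The effect is easy to reproduce outside of any DAQ code. The sketch below compares the two strategies against an exact rational result; the function names are illustrative, and Δt = 0.1 is chosen only because it is a familiar value that a double cannot represent exactly.

```python
from fractions import Fraction


def elapsed_by_accumulation(dt: float, n: int) -> float:
    """t = dt + dt + ... (n times): one rounding error per addition."""
    t = 0.0
    for _ in range(n):
        t += dt
    return t


def elapsed_by_multiplication(dt: float, n: int) -> float:
    """t = dt * n: a single rounding, regardless of n."""
    return dt * n


def abs_error(value: float, dt: float, n: int) -> float:
    """Distance from the exact product of the stored dt and n, using rationals."""
    return float(abs(Fraction(value) - Fraction(dt) * n))


if __name__ == "__main__":
    dt, n = 0.1, 10
    acc = elapsed_by_accumulation(dt, n)
    mul = elapsed_by_multiplication(dt, n)
    print(acc, abs_error(acc, dt, n))  # 0.9999999999999999 and its error
    print(mul, abs_error(mul, dt, n))  # 1.0 and its (smaller) error
```

Even after ten additions the accumulated value is already further from the exact result than the multiplied one, and the gap tends to widen with more samples. Tracking time as an integer count of nanoseconds or samples is another way to sidestep the problem.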

Extended Duration Testing: We validated performance over multiple durations - short tests showed consistent sub-microsecond performance, but extended tests (8+ hours) revealed the time tracking issue, and overnight tests (12+ hours) exposed the importance of robust network monitoring and automatic recovery.

Key Takeaways

- Validation is critical: Don’t assume synchronization works without rigorous testing

- Monitor continuously: Network-based timing can fail silently

- Watch for systematic offsets: UTC compensation may be required in mixed environments

- Floating-point arithmetic matters: Cumulative timing calculations must account for precision limitations

The result was confidence that our distributed system could deliver timing precision comparable to traditional shared-clock approaches, with added network-based flexibility.

Conclusion

Distributed measurement systems no longer require a trade-off between timing precision and installation flexibility. Using IEEE 1588/PTP with NI's TSN-enabled CompactDAQ systems, we achieved sub-microsecond synchronization across distributed chassis while leveraging standard network infrastructure.

The key insights from our implementation:

- Network-based timing can match traditional shared-clock precision when properly implemented

- Hardware timestamping and continuous sync monitoring are essential for reliable operation

- Validation testing reveals critical implementation details that theory doesn't cover

For test engineers facing the challenges of distributed measurements - whether it's a sprawling wind tunnel, high-voltage isolation requirements, or multi-vendor system integration - network time synchronization opens up architectural possibilities that were previously impractical.

The technology is mature, the hardware support is robust, and the implementation, while requiring attention to detail, is well within reach of any experienced LabVIEW developer. The data integrity your test campaigns depend on no longer needs to be constrained by cable length limitations.

Ready to distribute your next measurement system? The timing has never been better.
